Artificial intelligence

From Satellites to the Great Wall, Intel Takes “AI Everywhere”

Artificial intelligence computer chips are becoming increasingly important for accelerating AI applications. Last year, semiconductor giant Intel unveiled a range of new AI chips to cement its position among the leading players. Berenice Baker hears from the general manager of Intel’s Artificial Intelligence Products Group, Naveen Rao, about how the US firm plans to take “AI everywhere” with its new hardware.

Intel’s slogan has evolved over the years from 1991’s “Intel inside” through a flirtation with “Leap ahead” and the mildly baffling “Sponsors of tomorrow” to a return to form with “Look inside”. At a recent small-but-perfectly-formed AI summit in San Francisco, the company revealed its ambition to take “AI everywhere” with its new chipset that supports the increasing demands of AI processing from data centres to the edge.

No one would have believed in the last years of the twentieth century that AI would have now become so pervasive; solutions in use today could barely have been imagined 10 years ago. Opening the event, Naveen Rao, vice president and general manager of Intel’s Artificial Intelligence Products Group, set out a wide variety of scenarios in which Intel’s AI chips have been called in to solve real-world problems.

Intel’s were the first AI chips in space aboard a European Space Agency (ESA) Earth observation satellite, helping the spacecraft decide which images and data out of the terabytes it collects every day to send to ground stations to trigger flood response. Intel AI technology is helping preserve the Great Wall of China by processing imagery collected by drones to create a 3D model of the landmark and automatically detect structural flaws.

Image courtesy of Intel

Using AI for good

The chips can be harnessed to perform deep learning research and neural network training to automate drug discovery. Intel partnered with Brazil-based Hoobox robotics to create a wheelchair controlled by facial expressions. Microsoft’s Bing search engine uses Intel technology to deliver faster, more intelligent searches.

And perhaps most unexpectedly, Intel AI is even helping protect endangered species. Non-profit RESOLVE uses Intel Movidius Vision Processing Units (VPUs) in its TrailGuard camera-based anti-poaching system for national parks to detect intruders and arrest poachers before they kill wildlife.

It’s paying off – Intel expects to have generated $3.5bn in revenue from its AI business in 2019, and while the move to edge processing is driving many innovations, enterprise computing remains its core business.

Rao explained: “There is no single approach, budget, chip or system; data readiness, expertise and use case determine the correct AI solution. AI will infuse everything, so we’ve put it everywhere.”

Rao said Intel AI technology can be found in the CPU, GPU, FPGA and ASIC, explaining that it starts with the CPU, the “foundation of AI”. Intel’s multipurpose Xeon CPU has a long history of being used for AI, and its DL [deep learning] Boost feature, built on Vector Neural Network Instructions (VNNI), boosts inference performance by 3x.

Addressing the slowdown of Moore’s Law

Of course, no discussion of ever-increasing computer power can be complete without a mention of Moore’s Law, first set out by Intel co-founder and former CEO Gordon Moore in 1965. He observed that the number of transistors on an integrated circuit chip was doubling roughly every year, a rate he revised in 1975 to a doubling every two years. While this law has broadly held true for over 50 years, many forecasters expect it to end around 2025.

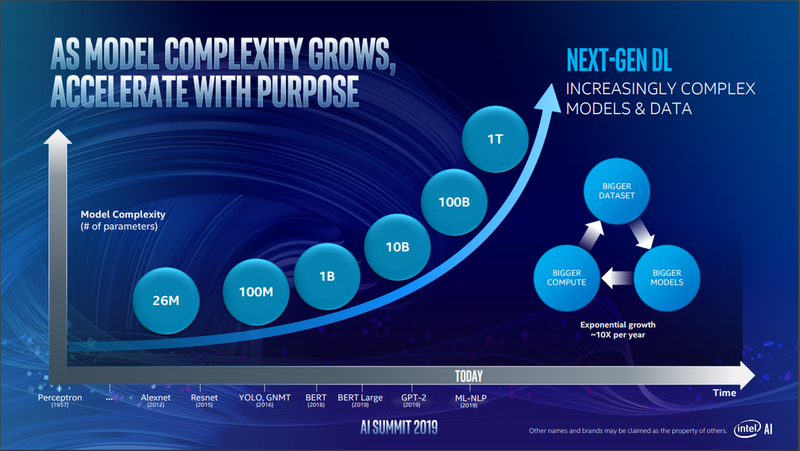

“Over the last 20 years we’ve gotten a lot better at storing data,” Rao said. “We have bigger data sets than ever before. Moore’s Law has led to much greater compute capability in a single place. And that allowed us to build better and bigger neural network models. This is kind of a virtuous cycle and it’s opened up new capabilities.”

But he warned of one trend to be aware of: the number of parameters, a measure of a model’s complexity, is increasing on the order of 10x year on year.

“This is exponential and is something that outpaces any other technology transition I’ve ever seen,” he said. “10x year-on-year means that we’re at 10 billion parameters today, we’ll be at 100 billion tomorrow, and 10 billion maxes out what we can do on current hardware. It means we’re going to have to think of more and different solutions going forward. No single tool will address every company’s needs; it’s an engineering problem that we have to build to realise these capabilities.”
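To put the two growth rates side by side, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, the 10 billion starting point and the 10x yearly rate are the round figures from Rao’s remarks, and the two-year doubling is the usual statement of Moore’s Law rather than a measurement.

```python
def growth_factor(rate: float, years: float, period_years: float = 1.0) -> float:
    """Multiplicative growth after `years` when a quantity grows by `rate`
    once every `period_years`."""
    return rate ** (years / period_years)


def parameters_after(start_parameters: float, years: float) -> float:
    """Model size after `years`, assuming the 10x year-on-year trend Rao describes."""
    return start_parameters * growth_factor(10.0, years)


def transistor_growth(years: float) -> float:
    """Relative transistor count after `years`, assuming a doubling every two years."""
    return growth_factor(2.0, years, period_years=2.0)


if __name__ == "__main__":
    start = 10e9  # 10 billion parameters, the figure quoted for "today"
    for year in range(5):
        print(
            f"year {year}: "
            f"{parameters_after(start, year):.0e} parameters, "
            f"transistors x{transistor_growth(year):.2f}"
        )
```

Under these assumptions, four years of 10x growth multiplies model size by 10,000, while a doubling every two years multiplies transistor count by four. That gap is the reason Rao argues for purpose-built hardware.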

Rao said that achieving this is going to take a lot more compute. The AI industry started by taking things that were built for other purposes and reusing them for AI; the next step is to build tools specifically for this problem. To this end, Rao presented Intel’s latest Nervana Neural Network Processor (NNP) range for training (NNP-T1000) and inference (NNP-I1000).

“AI inference is an extremely important segment of the market and is how intelligence gets out to the world,” he said. “NNP is built from the ground up to lead in three things: to optimise performance, performance per watt and compute density.”

Image courtesy of Intel

Optimising deep learning training

The Intel Nervana NNP-T application-specific integrated circuit (ASIC) was designed from scratch to optimise deep learning training. It incorporates features for handling large models without the overheads that more general-purpose chips carry to support legacy technology. The Nervana NNP-I supports the rapidly growing volume of inference work at enterprise scale.

Both are energy efficient, addressing the power demands of earlier AI solutions that relied on less specialised processors, and can scale from small clusters up to the largest supercomputer pods. They have been snapped up by heavyweight AI customers including Baidu and Facebook.

Facebook director of AI system co-design Misha Smelyanskiy said: “We are excited to be working with Intel to deploy faster and more efficient inference compute with the Intel Nervana Neural Network Processor for inference (NNP-I) and to extend support for our state-of-the-art deep learning compiler, Glow, to the NNP-I.”

As seen in the introductory case studies, many AI applications depend on image processing. To support future demands, Intel is launching its next-generation Intel Movidius VPU in the first half of 2020. The company says it incorporates architectural advances that are expected to deliver more than 10x the inference performance of the previous generation without sacrificing power efficiency.

As we enter the 2020s, Intel has set its sights on dominating the near-future AI landscape. If the company has its way, “Intel inside” will indeed become synonymous with “AI everywhere”.
